They began experimenting with AI to separate sounds, including those in musical recordings, in the early 2000s. Engineers first tried to isolate a song’s vocals or guitars by adjusting the left and right channels in a stereo recording or fiddling with the equalizer settings to boost or cut certain frequencies. In 1953, the British cognitive scientist Colin Cherry coined the phrase “cocktail party effect” to describe the human ability to zero in on a single conversation in a crowded, noisy room. Sound source separation has long fascinated scientists. “We haven’t reached the same level with audio,” he says. program run by Facebook AI and France’s National Institute for Research in Computer Science and Automation (INRIA), says his goal is to make AI systems adept at recognizing the components of an audio source, just as they can now accurately separate out the different objects in a single photograph. Defossez recently published a research paper explaining his work, and he also released the code so that other AI researchers can experiment with and build on Demucs.ĭefossez, who is part of a Ph.D.

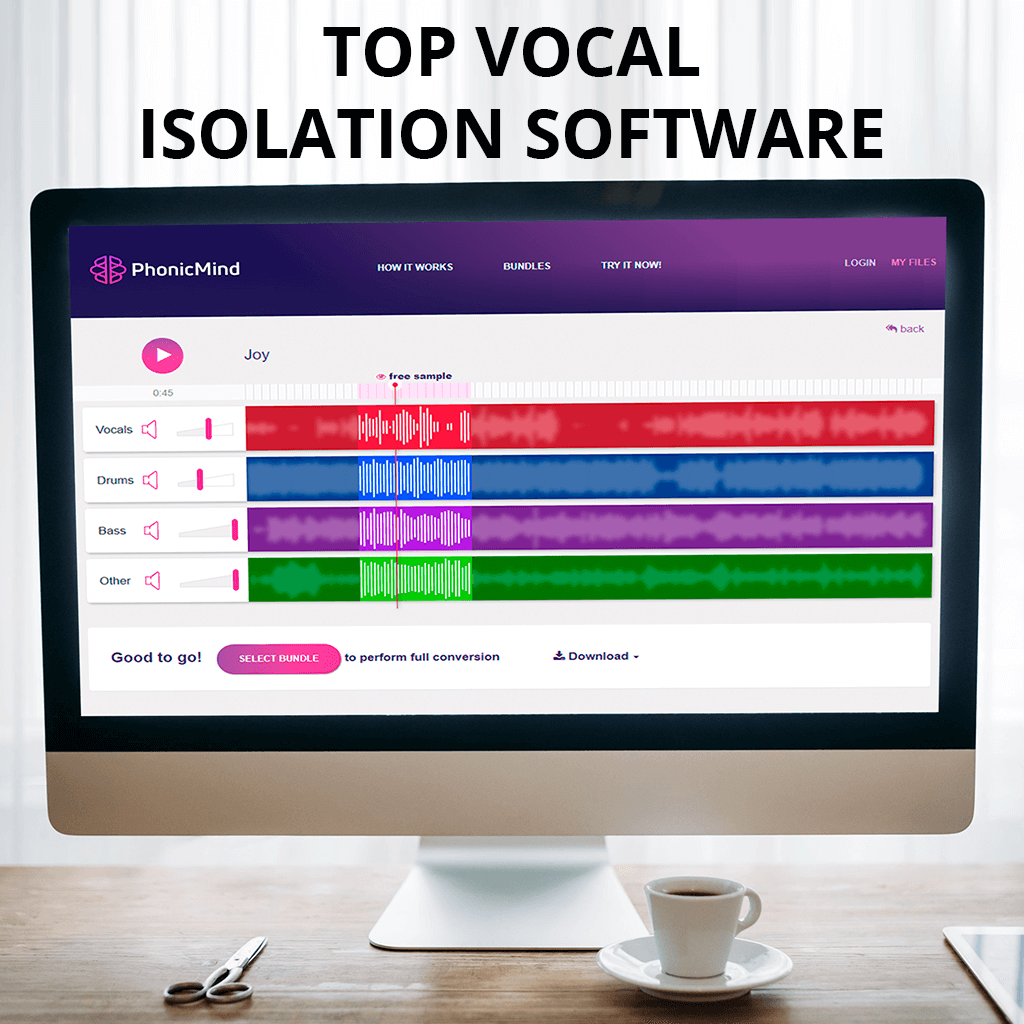

Defossez says technology like Demucs won’t just help musicians learn a tricky guitar riff or drum fill it could also one day make it easier for AI assistants to hear voice commands in a noisy room, and enhance technology such as hearing aids and noise-canceling headphones. It’s only a research project for now, but Defossez hopes it will have real-world benefits. Defossez’s system, called Demucs, works by detecting complex patterns in sound waves, building a high-level understanding of which waveform patterns belong to each instrument or voice, and then neatly separating them. A system created by Alexandre Defossez, a research scientist in Facebook AI’s Paris lab, uses AI to analyze a tune and then quickly split it into the various component tracks. Facebook’s AI researchers have developed a system that can do just that – with an uncanny level of accuracy.
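The early stereo trick engineers tried, adjusting the left and right channels of a recording, can be sketched in a few lines. The Python below is a minimal illustration and has nothing to do with how Demucs itself works: it assumes a lead vocal mixed identically into both channels and a guitar panned hard left, and the function names (`side_channel`, `mid_channel`, `tone`) are hypothetical. Subtracting one channel from the other cancels whatever sits in the center, which is why this approach could strip a vocal out but could not cleanly pull one out on its own.

```python
import math


def side_channel(left, right):
    """Return (L - R) / 2, the stereo 'side' signal.

    Anything mixed identically into both channels (for example, a lead
    vocal panned dead center) cancels out; off-center sources survive.
    """
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    return [(lv - rv) / 2 for lv, rv in zip(left, right)]


def mid_channel(left, right):
    """Return (L + R) / 2, the 'mid' signal, which keeps centered sources."""
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    return [(lv + rv) / 2 for lv, rv in zip(left, right)]


def tone(freq_hz, n, rate_hz, amp=1.0):
    """Generate n samples of a sine wave (a stand-in for a real instrument)."""
    return [amp * math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]


if __name__ == "__main__":
    rate, n = 8000, 800
    vocal = tone(440, n, rate)          # hypothetical vocal, panned center
    guitar = tone(196, n, rate, 0.5)    # hypothetical guitar, panned hard left
    left = [v + g for v, g in zip(vocal, guitar)]
    right = list(vocal)                 # right channel carries only the vocal

    side = side_channel(left, right)    # vocal cancels, leaving guitar / 2
    mid = mid_channel(left, right)      # vocal + guitar / 2: not a clean vocal
    print("max side error vs guitar/2:",
          max(abs(s - g / 2) for s, g in zip(side, guitar)))
    print("max mid deviation from pure vocal:",
          max(abs(m - v) for m, v in zip(mid, vocal)))
```

The mid signal still contains the guitar at half amplitude, and in a real mix the side signal also loses everything else panned to the center, which often includes bass and kick drum. Limits like these are part of why researchers moved on to learned approaches.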
